Flutter Engine

The Flutter Engine

#include <flow_graph_compiler.h>

Definition at line 215 of file flow_graph_compiler.h.

|

inline |

Definition at line 217 of file flow_graph_compiler.h.

|

inline virtual |

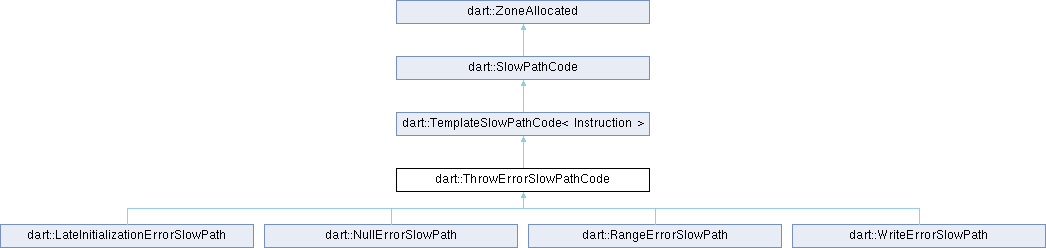

Reimplemented in dart::NullErrorSlowPath.

Definition at line 226 of file flow_graph_compiler.h.

|

inline virtual |

Definition at line 225 of file flow_graph_compiler.h.

|

virtual |

Implements dart::SlowPathCode.

Definition at line 3091 of file flow_graph_compiler.cc.

|

inline virtual |

Reimplemented in dart::RangeErrorSlowPath, dart::WriteErrorSlowPath, dart::LateInitializationErrorSlowPath, and dart::NullErrorSlowPath.

Definition at line 232 of file flow_graph_compiler.h.

|

inline virtual |

Reimplemented in dart::RangeErrorSlowPath, dart::WriteErrorSlowPath, and dart::LateInitializationErrorSlowPath.

Definition at line 230 of file flow_graph_compiler.h.

|

pure virtual |

|

inline virtual |

Reimplemented in dart::RangeErrorSlowPath, dart::WriteErrorSlowPath, and dart::LateInitializationErrorSlowPath.

Definition at line 227 of file flow_graph_compiler.h.