{
  // ...
  BitVector* all_places =
      // ...
  all_places->SetAll();

  BitVector* all_aliased_places = nullptr;
  // ...
    all_aliased_places =
        // ...
  }

  const auto& places = aliased_set_->places();
  for (intptr_t i = 0; i < places.length(); i++) {
    Place* place = places[i];
    if (place->DependsOnInstance()) {
      Definition* instance = place->instance();
      if ((all_aliased_places != nullptr) &&
          // ...
           instance->Identity().IsAliased())) {
        all_aliased_places->Add(i);
      }
      // ...
      }
    } else {
      if (all_aliased_places != nullptr) {
        all_aliased_places->Add(i);
      }
    }
    // ...
      Definition* index = place->index();
      if (index != nullptr) {
        live_in_[index->GetBlock()->postorder_number()]->Add(i);
      }
    }
  }

  // ...
       !block_it.Done(); block_it.Advance()) {
    BlockEntryInstr* block = block_it.Current();
    const intptr_t postorder_number = block->postorder_number();

    BitVector* kill = kill_[postorder_number];
    BitVector* live_in = live_in_[postorder_number];
    BitVector* live_out = live_out_[postorder_number];

    ZoneGrowableArray<Instruction*>* exposed_stores = nullptr;

    for (BackwardInstructionIterator instr_it(block); !instr_it.Done();
         instr_it.Advance()) {
      Instruction* instr = instr_it.Current();

      bool is_load = false;
      bool is_store = false;
      Place place(instr, &is_load, &is_store);
      if (place.IsImmutableField()) {
        continue;
      }

      if (is_store) {
        // ...
              CanEliminateStore(instr)) {
            if (FLAG_trace_optimization && graph_->should_print()) {
              THR_Print("Removing dead store to place %" Pd " in block B%" Pd
                        "\n",
                        // ...
            }
            instr_it.RemoveCurrentFromGraph();
          }
        } else if (!live_in->Contains(GetPlaceId(instr))) {
          if (exposed_stores == nullptr) {
            const intptr_t kMaxExposedStoresInitialSize = 5;
            exposed_stores = new (zone) ZoneGrowableArray<Instruction*>(
                // ...
          }
          exposed_stores->Add(instr);
        }
        // ...
        continue;
      }


      if (instr->IsThrow() || instr->IsReThrow() || instr->IsReturnBase()) {
        live_out->CopyFrom(all_places);
      }

      // ...
        if (instr->HasUnknownSideEffects() || instr->IsReturnBase()) {
          live_in->CopyFrom(all_places);
          continue;
        } else if (instr->MayThrow()) {
          // ...
            live_in->AddAll(all_aliased_places);
          } else {
            live_in->CopyFrom(all_places);
          }
          continue;
        }
      } else {
        if (instr->HasUnknownSideEffects() || instr->CanDeoptimize() ||
            instr->MayThrow() || instr->IsReturnBase()) {
          live_in->CopyFrom(all_places);
          continue;
        }
      }


      Definition* defn = instr->AsDefinition();
      // ...
        const intptr_t alias = aliased_set_->LookupAliasId(place.ToAlias());
        // ...
        continue;
      }
    }
    exposed_stores_[postorder_number] = exposed_stores;
  }
  if (FLAG_trace_load_optimization && graph_->should_print()) {
    // ...
  }
}


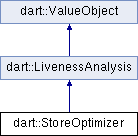

Public Member Functions inherited from dart::LivenessAnalysis

Public Member Functions inherited from dart::LivenessAnalysis Public Member Functions inherited from dart::ValueObject

Public Member Functions inherited from dart::ValueObject Protected Member Functions inherited from dart::LivenessAnalysis

Protected Member Functions inherited from dart::LivenessAnalysis Protected Attributes inherited from dart::LivenessAnalysis

Protected Attributes inherited from dart::LivenessAnalysis