{
#if defined(DART_PRECOMPILED_RUNTIME)
  return nullptr;
#else
    return new (Z) InstanceSerializationCluster(is_canonical, cid);
  }
    return new (Z) TypedDataViewSerializationCluster(cid);
  }
    return new (Z) ExternalTypedDataSerializationCluster(cid);
  }
    return new (Z) TypedDataSerializationCluster(cid);
  }

#if !defined(DART_COMPRESSED_POINTERS)

    if (auto const type = ReadOnlyObjectType(cid)) {
      return new (Z) RODataSerializationCluster(Z, type, cid, is_canonical);
    }
  }
#endif

  const bool cluster_represents_canonical_set =

    case kClassCid:
      return new (Z) ClassSerializationCluster(num_cids_ + num_tlc_cids_);
    case kTypeParametersCid:
      return new (Z) TypeParametersSerializationCluster();
    case kTypeArgumentsCid:
      return new (Z) TypeArgumentsSerializationCluster(
          is_canonical, cluster_represents_canonical_set);
    case kPatchClassCid:
      return new (Z) PatchClassSerializationCluster();
    case kFunctionCid:
      return new (Z) FunctionSerializationCluster();
    case kClosureDataCid:
      return new (Z) ClosureDataSerializationCluster();
    case kFfiTrampolineDataCid:
      return new (Z) FfiTrampolineDataSerializationCluster();
    case kFieldCid:
      return new (Z) FieldSerializationCluster();
    case kScriptCid:
      return new (Z) ScriptSerializationCluster();
    case kLibraryCid:
      return new (Z) LibrarySerializationCluster();
    case kNamespaceCid:
      return new (Z) NamespaceSerializationCluster();
    case kKernelProgramInfoCid:
      return new (Z) KernelProgramInfoSerializationCluster();
    case kCodeCid:
      return new (Z) CodeSerializationCluster(heap_);
    case kObjectPoolCid:
      return new (Z) ObjectPoolSerializationCluster();
    case kPcDescriptorsCid:
      return new (Z) PcDescriptorsSerializationCluster();
    case kCodeSourceMapCid:
      return new (Z) CodeSourceMapSerializationCluster();
    case kCompressedStackMapsCid:
      return new (Z) CompressedStackMapsSerializationCluster();
    case kExceptionHandlersCid:
      return new (Z) ExceptionHandlersSerializationCluster();
    case kContextCid:
      return new (Z) ContextSerializationCluster();
    case kContextScopeCid:
      return new (Z) ContextScopeSerializationCluster();
    case kUnlinkedCallCid:
      return new (Z) UnlinkedCallSerializationCluster();
    case kICDataCid:
      return new (Z) ICDataSerializationCluster();
    case kMegamorphicCacheCid:
      return new (Z) MegamorphicCacheSerializationCluster();
    case kSubtypeTestCacheCid:
      return new (Z) SubtypeTestCacheSerializationCluster();
    case kLoadingUnitCid:
      return new (Z) LoadingUnitSerializationCluster();
    case kLanguageErrorCid:
      return new (Z) LanguageErrorSerializationCluster();
    case kUnhandledExceptionCid:
      return new (Z) UnhandledExceptionSerializationCluster();
    case kLibraryPrefixCid:
      return new (Z) LibraryPrefixSerializationCluster();
    case kTypeCid:
      return new (Z) TypeSerializationCluster(is_canonical,
                                              cluster_represents_canonical_set);
    case kFunctionTypeCid:
      return new (Z) FunctionTypeSerializationCluster(
          is_canonical, cluster_represents_canonical_set);
    case kRecordTypeCid:
      return new (Z) RecordTypeSerializationCluster(
          is_canonical, cluster_represents_canonical_set);
    case kTypeParameterCid:
      return new (Z) TypeParameterSerializationCluster(
          is_canonical, cluster_represents_canonical_set);
    case kClosureCid:
      return new (Z) ClosureSerializationCluster(is_canonical);
    case kMintCid:
      return new (Z) MintSerializationCluster(is_canonical);
    case kDoubleCid:
      return new (Z) DoubleSerializationCluster(is_canonical);
    case kInt32x4Cid:
    case kFloat32x4Cid:
    case kFloat64x2Cid:
      return new (Z) Simd128SerializationCluster(cid, is_canonical);
    case kGrowableObjectArrayCid:
      return new (Z) GrowableObjectArraySerializationCluster();
    case kRecordCid:
      return new (Z) RecordSerializationCluster(is_canonical);
    case kStackTraceCid:
      return new (Z) StackTraceSerializationCluster();
    case kRegExpCid:
      return new (Z) RegExpSerializationCluster();
    case kWeakPropertyCid:
      return new (Z) WeakPropertySerializationCluster();

    case kMapCid:

    case kConstMapCid:
      return new (Z) MapSerializationCluster(is_canonical, kConstMapCid);
    case kSetCid:

    case kConstSetCid:
      return new (Z) SetSerializationCluster(is_canonical, kConstSetCid);
    case kArrayCid:
      return new (Z) ArraySerializationCluster(is_canonical, kArrayCid);

    case kImmutableArrayCid:
      return new (Z)
          ArraySerializationCluster(is_canonical, kImmutableArrayCid);

    case kWeakArrayCid:
      return new (Z) WeakArraySerializationCluster();
    case kStringCid:
      return new (Z) StringSerializationCluster(
          is_canonical, cluster_represents_canonical_set && !vm_);
#define CASE_FFI_CID(name) case kFfi##name##Cid:
#undef CASE_FFI_CID
      return new (Z) InstanceSerializationCluster(is_canonical, cid);
    case kDeltaEncodedTypedDataCid:
      return new (Z) DeltaEncodedTypedDataSerializationCluster();
    case kWeakSerializationReferenceCid:
#if defined(DART_PRECOMPILER)
      return new (Z) WeakSerializationReferenceSerializationCluster();
#endif
    default:
      break;
  }

  return nullptr;
#endif
}
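
The function above is a factory that maps a class id (`cid`) to a cluster object. The sketch below is a minimal, standalone illustration of that dispatch shape and is not the VM's code. `Cluster`, `NewClusterForClassSketch`, and the shortened `ClassId` enum are hypothetical names. The `cid >= kNumPredefinedCids` fallback is an assumption, because the condition guarding the first `InstanceSerializationCluster` return is not visible in this listing. The sketch also uses `std::unique_ptr` in place of the zone allocation `new (Z)`.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical, heavily shortened list of class ids (illustration only).
enum ClassId : int {
  kIllegalCid = 0,
  kMintCid,
  kDoubleCid,
  kArrayCid,
  kImmutableArrayCid,
  kNumPredefinedCids,
};

// Hypothetical stand-in for a serialization cluster.
struct Cluster {
  std::string name;
  int cid;
  bool is_canonical;
};

// Returns a cluster for `cid`, or nullptr when no cluster is known.
std::unique_ptr<Cluster> NewClusterForClassSketch(int cid, bool is_canonical) {
  assert(cid >= 0);
  // Assumption: ids past the predefined range use a generic instance cluster.
  if (cid >= kNumPredefinedCids) {
    return std::make_unique<Cluster>(Cluster{"Instance", cid, is_canonical});
  }
  switch (cid) {
    case kMintCid:
      return std::make_unique<Cluster>(Cluster{"Mint", cid, is_canonical});
    case kDoubleCid:
      return std::make_unique<Cluster>(Cluster{"Double", cid, is_canonical});
    // Two ids share one cluster type; the id itself is passed through.
    case kArrayCid:
    case kImmutableArrayCid:
      return std::make_unique<Cluster>(Cluster{"Array", cid, is_canonical});
    default:
      break;
  }
  // The caller is expected to handle the "no cluster" case.
  return nullptr;
}
```

As in the listing, several ids can share one cluster type while still passing their own `cid` through, and an unhandled id falls out of the `switch` to a single `nullptr` return.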

#define CASE_FFI_CID(name)

#define CLASS_LIST_FFI_TYPE_MARKER(V)

void Push(ObjectPtr object, intptr_t cid_override=kIllegalCid)

static bool IncludesCode(Kind kind)

IsolateGroup * isolate_group() const

bool IsTypedDataViewClassId(intptr_t index)

bool IsTypedDataClassId(intptr_t index)

bool IsExternalTypedDataClassId(intptr_t index)

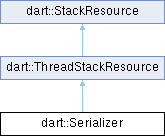

Static Public Member Functions inherited from dart::StackResource